Today, no one is surprised that every device is getting the "smart" label. Phones, televisions, fridges, thermostats, headphones... and now glasses again. The idea is simple: cram a computer, display, camera, and microphones into an everyday item and pretend that it suddenly becomes "more useful." The problem is that the result doesn’t always feel natural.

Smartphones and smart TVs took off quickly. But "smart glasses"? They have been a tough nut to crack for years. Most people simply don’t wear glasses – some have good vision, and the rest opt for contact lenses. So, who is the target audience? Glasses wearers? Fans of expensive Ray-Bans? Or is it all about moving the phone's functions directly onto your face?

On top of that, there’s the issue of privacy. Cameras and microphones that are always on – that sounds like a nightmare to many. Back in 2013, Google Glass even gave rise to the nickname "glassholes" for users who completely ignored social conventions and other people's sense of privacy.

What can Ray-Ban Display Glasses do?

The new Meta and Ray-Ban glasses are quite a specific mix. The right lens holds a 600 × 600 px colour display with a 20° field of view; on top of that, the glasses have a 12 MP camera, six microphones, and stereo speakers. This is not full AR; rather, it's a small screen that appears when you look slightly to the right.

However, the most interesting addition is the EMG band – the so-called Neural Band. It reads electrical signals from the wrist muscles to recognise hand gestures. In theory, it will even let you write in the air – although for now, that function is still in beta.

Compared with Google Glass, they have a stronger processor, more flash memory, and faster RAM – but still only 2 GB of it. Gesture control on the side of the frames is practically the same, and the biggest difference is indeed the wrist band. The catch? It's another gadget that needs charging. And if you're already wearing a smartwatch, the band claims your one remaining wrist.

The Eternal Problem – Who Needs This Anyway?

Google Glass, Apple Vision Pro, and the whole lot had one common issue: no one knew what they were actually for. Browsing emails or pictures of cats on a small screen? Navigation in the style of "map in the corner of your eye"? It’s all fine and dandy until you have to reach for your phone to make sure you're genuinely heading in the right direction.

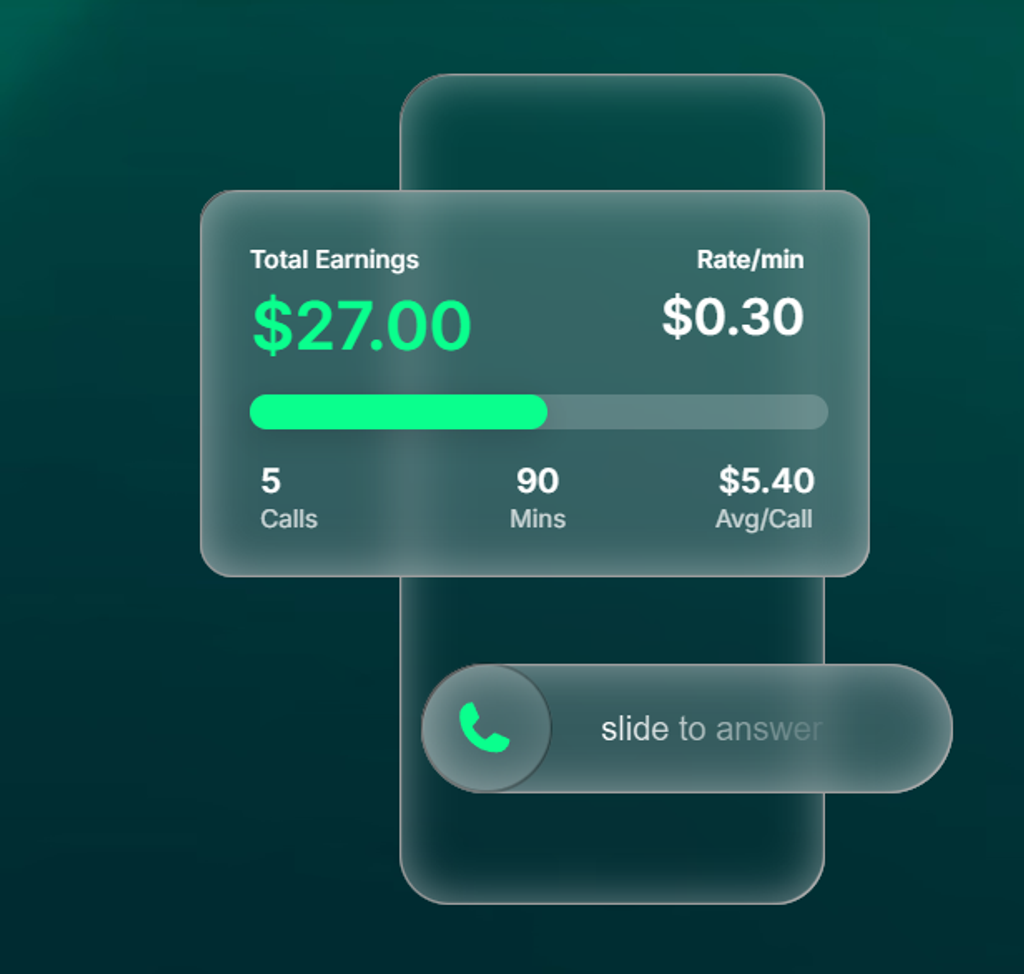

Meta is focusing on integration with Meta AI – real-time language recognition, translated subtitles, quick replies. It sounds good, but a regular smartphone does all of that just as well, with a much larger screen and a better UI.

Old Fears, New Glasses

In the background, the issue of the "panopticon" remains – the feeling that you could be watched at any time and anywhere. Smartphones at least clearly show when someone is recording. Smart glasses? Not always. A tiny LED light? Easy to miss.

It's no wonder that people react nervously. A video appeared on TikTok of a woman who discovered that the person waxing her in the salon was wearing such glasses. It may seem funny, but it's not hard to understand why she felt uncomfortable.

So the problem is the same as with Google Glass: how do you reconcile "innovation" with the fact that other people feel they are being filmed without consent? And what if these are your only prescription glasses – should you take them off every time you enter a cinema or a museum?

Dumber versions of "smart"

Not all smart glasses need to have a camera. There are, for example, sunglasses that tint automatically and models that act as a second screen for a laptop. There are also conventional AR designs that do not provoke such controversy. But those are not the ones that attract media attention – and not the ones whose wearers get called "glassholes".

Katarzyna Petru
